Designing Trust-Calibrated STT Model Selection for Language Analysts

Research-driven UX / XUI Design · LAS-funded Team Project

Year: Jan - Dec 2025

Location: Raleigh, NC

Dimensions: 1280 × 832 px

My Role: Research Assistant (literature review, precedent analysis, UI design, prototyping, scenario videos, visual system development)

Software and Techniques Used

Figma – interface architecture, mid- to high-fidelity prototyping, layered explanation UI design, and interaction flow mapping

After Effects – scenario-based motion studies and interaction simulation for analyst workflows

Illustrator / Photoshop – system diagrams, model comparison visuals, and supporting interface assets

Project Description

This project investigates how explainable user interfaces can support language analysts in selecting the most appropriate Speech-to-Text (STT) model within increasingly complex analytic workflows. The work was conducted as a year-long, research-driven team project in collaboration with the Laboratory for Analytic Sciences (LAS), a translational research lab that brings together government, academic, and industry partners to advance intelligence analysis and national security.

Within the LAS research environment, our team explored how explainable AI (XAI), human–AI collaboration, and interface design can bridge the gap between advanced machine learning systems and the real-world needs of non-technical domain experts. The project focuses on anticipating a future scenario in which analysts have access to a vast and specialized toolkit of STT models, and where effective model selection becomes a critical part of everyday analytic work.

UX/UI Design

Design Problem

Advances in speech recognition have led to a rapid expansion of specialized STT models, each optimized for different audio conditions, languages, and analytic goals. While this diversity offers analysts greater technical power, it also introduces a new strategic challenge: choosing the right model for a specific audio file quickly, confidently, and repeatedly. Language analysts are experts in content, context, and interpretation, rather than in machine learning, and current systems often assume a level of technical literacy that does not align with real-world workflows.

As STT ecosystems grow, model selection risks becoming a bottleneck rather than an enabler. Without clear guidance, analysts may rely on trial-and-error, default models, or opaque system scores, leading to inefficiency, mistrust, and cognitive overload.

Design Challenge

How might an explainable user interface help language analysts confidently select the most appropriate STT model—without increasing cognitive overload?

This challenge reframes model selection as a workflow-integrated decision, rather than a separate technical task. Instead of asking analysts to understand machine learning internals, the interface must surface just enough explanation, at the right moment, to support action. The goal is not maximal transparency, but calibrated trust, allowing analysts to remain agile as they move between tasks, audio conditions, and analytic goals.

Design Process

This project followed an iterative, research-driven design process that integrates human-centered design with principles from Explainable AI and human–AI collaboration. Rather than treating interface design as a linear sequence of steps, our process moved fluidly between understanding analyst workflows, framing decision challenges, exploring alternative interaction models, and validating design choices through feedback and evaluation.

This end-to-end process ensured that each design decision, particularly regarding explainability and trust calibration, was continuously informed by user needs and real-world analytical constraints, ultimately leading to a layered explanation system aligned with analysts’ workflows rather than abstract model logic.

Key Insight: Layered Explanations Support Understanding, Transparency, and Trust

This approach aligns with prior work distinguishing between local explanations, which justify a recommendation in the user’s current context, and global explanations, which reveal broader system behavior and logic. Research suggests that trust and acceptance increase when high-level system understanding is paired with situational, task-specific reasoning. For our project, layered explanations emerged as a way to maintain transparency without overwhelming analysts, enabling them to engage with the system at a depth appropriate to their role, experience, and workflow moment.

Research Foundation

We surveyed commercial and research-based STT tools to understand how models are currently presented, compared, and explained, and identified a consistent gap between system-level metrics and the needs of analysts. In parallel, we conducted a literature review focused on XAI trends, recommendation systems, and interpretability for non-technical users, with particular attention to how explanations influence trust and adoption.

Across these sources, a consistent insight emerged: explanation effectiveness depends not only on technical depth, but also on structure, timing, and relevance within the user's task context.

From Insight to Interface Structure

To operationalize this insight, we translated the What–Why–How explanation framework into a structured UI aligned with the STT model selection workflow. The interface presents recommendations at multiple levels: a glanceable, plain-language summary of suggested models; an intermediate layer that explains task-relevant factors, such as audio characteristics and model strengths; and an optional, deeper layer that exposes technical logic and performance metrics for users seeking validation. Early abstractions helped validate the layered explanation structure before applying it to STT-specific scenarios, resulting in an interface where explanations remain contextual, scalable, and embedded within analysts’ existing workflows rather than interrupting them.
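To make the three-layer structure concrete, here is a minimal sketch of how one recommendation's What–Why–How content might be modeled and progressively disclosed. The class name `LayeredExplanation`, the `render` function, the model name, and the metric value are all hypothetical and illustrative; they are not the project's implementation or data.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredExplanation:
    """Hypothetical container for one model recommendation's three explanation layers."""
    what: str  # Level 1: glanceable, plain-language summary
    why: list[str] = field(default_factory=list)  # Level 2: task-relevant factors
    how: dict[str, float] = field(default_factory=dict)  # Level 3: technical metrics


def render(expl: LayeredExplanation, depth: int) -> list[str]:
    """Return only the lines visible at a given disclosure depth (1 = glance, 3 = full)."""
    if depth not in (1, 2, 3):
        raise ValueError("depth must be 1, 2, or 3")
    lines = [expl.what]
    if depth >= 2:
        lines += [f"Why: {reason}" for reason in expl.why]
    if depth >= 3:
        lines += [f"How: {name} = {value}" for name, value in expl.how.items()]
    return lines


# Illustrative values only, not project results.
example = LayeredExplanation(
    what="Model A is recommended for this noisy phone call.",
    why=["Audio has heavy background noise", "Model A is tuned for telephony audio"],
    how={"WER (noisy test set)": 0.18},
)
print(render(example, 1))
print(render(example, 3))
```

Each deeper level only appends to what the shallower levels already show, so an analyst who expands the explanation never loses the glanceable summary.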

Persona & User Journey Map

Concept Exploration & Early Feedback

Low-Fidelity Exploration

Early design exploration focused on brainstorming how three-level explanations could be embedded within the existing analyst workflow. We experimented with multiple visualization strategies, interaction patterns, and placements for model recommendations and explanations, testing how different forms of transparency affected speed and clarity.

Idea One - List-Based

When users are working with a model, the system automatically suggests alternatives based on performance and community usage data, presented in a concise list format.

Idea Two - Visual Comparison

The system visually compares the current model with top alternatives across key performance metrics, helping users quickly identify strengths and weaknesses.

Idea Three - Task-Focused Expansion

Based on the user’s current activity, the system suggests not only better models, but also potentially more suitable tasks, supporting forward-thinking exploration beyond their initial query.

Early Feedback and Design Pivot

Initial feedback revealed that some visualization styles, particularly radar charts and dense comparative graphics, were challenging for analysts to quickly interpret. Rather than increasing clarity, these representations introduced friction and cognitive strain. Analysts consistently preferred familiar, readable patterns that supported rapid scanning and comparison.

This feedback prompted a key pivot: instead of emphasizing novel visual complexity, the design prioritized legibility, restraint, and task alignment, demonstrating how research insights directly reshaped the interface direction.

Mid-Fidelity Design Directions

At the mid-fidelity stage, the design evolved into two complementary interaction models that explore different balances between system guidance and analyst control. These directions reflect how explainability, agency, and trust can be distributed differently depending on workflow needs.

Direction One: System-Guided Model Discovery

This direction emphasizes automation and system initiative. The interface detects key audio characteristics from the selected audio cut and presents a ranked list of STT model recommendations. Suggestions are organized into two sources: system-generated recommendations based on model performance and audio analysis, and community-informed recommendations derived from peer usage patterns. Analysts can quickly preview, compare, and run models with minimal interaction, making this approach well-suited for time-sensitive tasks and rapid triage scenarios.
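The two recommendation sources in this direction can be sketched as two independently ranked lists. This is a minimal illustration, assuming a hypothetical heuristic (share of detected audio traits a model is tuned for) for the system list and a hypothetical peer-usage count for the community list; the model names, traits, and counts are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SttModel:
    name: str
    strengths: frozenset[str]  # hypothetical: audio traits the model is tuned for
    peer_uses: int  # hypothetical: peer runs on similar audio


def system_ranked(models, detected, top_k=3):
    """System source: rank by the share of detected audio traits each model covers."""
    def coverage(m):
        return len(m.strengths & detected) / len(detected) if detected else 0.0
    ranked = sorted(models, key=lambda m: (-coverage(m), m.name))
    return [(m.name, round(coverage(m), 2)) for m in ranked[:top_k]]


def community_ranked(models, top_k=3):
    """Community source: rank by peer usage on similar audio, most used first."""
    ranked = sorted(models, key=lambda m: (-m.peer_uses, m.name))
    return [(m.name, m.peer_uses) for m in ranked[:top_k]]


catalog = [
    SttModel("noise-robust", frozenset({"background_noise", "telephony"}), 12),
    SttModel("broadcast", frozenset({"clean_speech"}), 30),
    SttModel("dialect", frozenset({"accent", "telephony"}), 5),
]
detected = {"background_noise", "telephony"}  # stand-in for automatic audio detection
print(system_ranked(catalog, detected))
print(community_ranked(catalog))
```

Keeping the two lists separate, rather than blending them into one opaque score, lets analysts see where system and community signals agree or diverge.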

Direction Two: Analyst-Guided Model Exploration

This direction prioritizes analyst agency and interpretability. Instead of relying on automated detection, analysts explicitly indicate the audio characteristics they observe, such as background noise, cadence issues, or semantic inconsistency, using a selectable criteria panel. Based on these inputs, the system returns a tailored set of STT model recommendations, visualized through comparable model cards. Community notes and analyst feedback are surfaced as trust signals, allowing users to validate recommendations through real-world usage context. This approach supports deliberate decision-making and deeper model understanding.
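The analyst-guided flow can be sketched as a filter over model cards driven by the criteria the analyst selects. This is a minimal sketch under stated assumptions: the criterion identifiers, the `ModelCard` fields, the `recommend` function, and the ranking rule (most selected criteria covered first) are hypothetical choices for illustration, not the project's logic.

```python
from dataclasses import dataclass, field

# Criteria from the selectable panel (identifiers are hypothetical).
CRITERIA = {"background_noise", "cadence_issues", "semantic_inconsistency"}


@dataclass
class ModelCard:
    name: str
    handles: set[str]  # criteria this model is assumed to handle well
    community_notes: list[str] = field(default_factory=list)  # shown as trust signals


def recommend(cards, selected):
    """Return cards matching at least one selected criterion, best coverage first."""
    unknown = selected - CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    scored = [(len(card.handles & selected), card) for card in cards]
    scored = [(n, card) for n, card in scored if n > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1].name))
    return [
        {
            "model": card.name,
            "matched": sorted(card.handles & selected),
            "notes": card.community_notes,
        }
        for _, card in scored
    ]


cards = [
    ModelCard("model-a", {"background_noise", "cadence_issues"}, ["Example peer note"]),
    ModelCard("model-b", {"semantic_inconsistency"}),
    ModelCard("model-c", {"background_noise"}),
]
for result in recommend(cards, {"background_noise", "cadence_issues"}):
    print(result)
```

Returning the matched criteria alongside each card supports the explanation: the analyst can see which of their own observations led to each suggestion.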

Survey & Evaluation

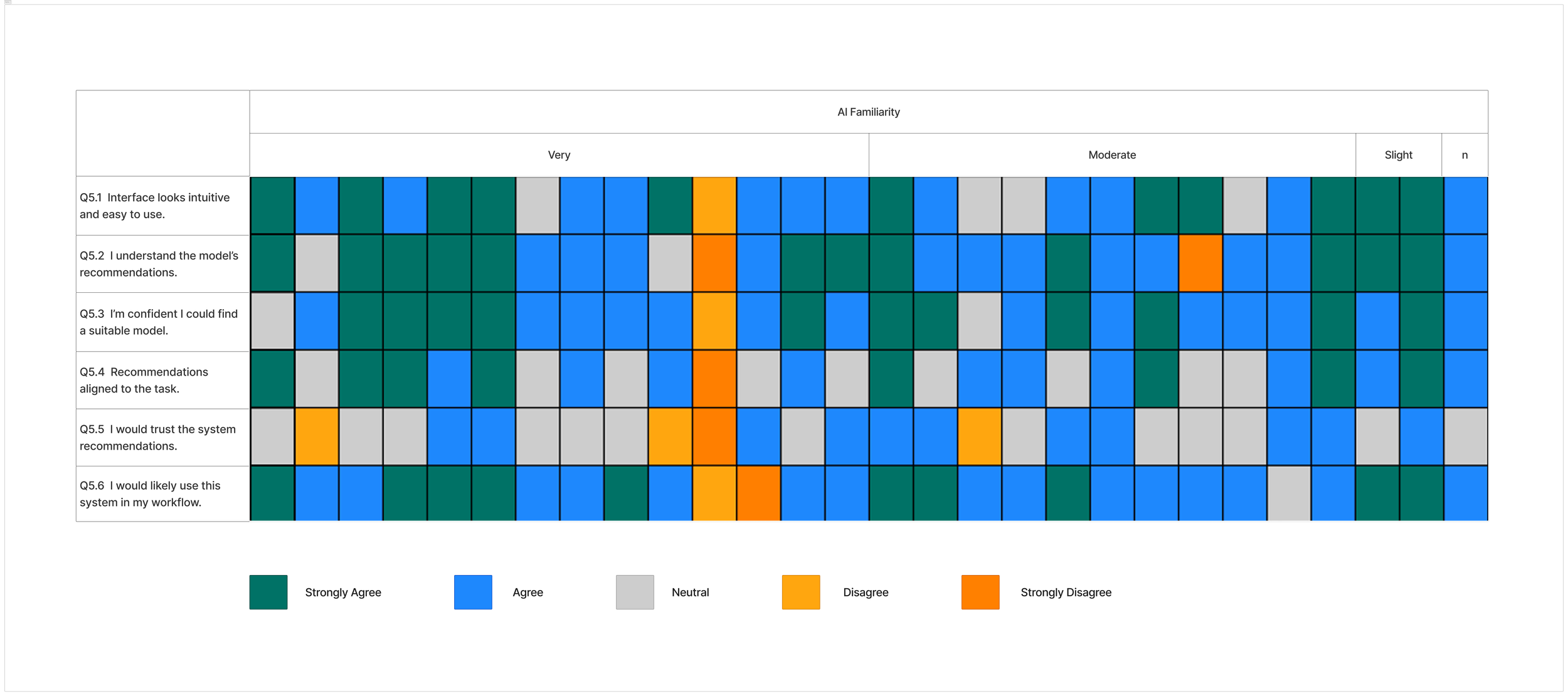

To evaluate the mid-fidelity design directions, we conducted a qualitative survey with 28 language analysts across varying levels of seniority, including senior, intermediate, and junior practitioners. Participants also reported varying levels of AI familiarity, enabling us to investigate how experience and technical comfort affected explanation needs and trust calibration. The primary goal of this study was to understand how analysts interpret STT model recommendations, what explanation strategies they rely on when making model selections, and how these preferences vary across expertise levels.

We first analyzed participant distribution by seniority and AI familiarity to establish context for the findings. Across seniority levels and degrees of AI familiarity, most participants agreed that the interface felt intuitive, that the recommendations were understandable, and that they felt confident identifying an appropriate STT model for their tasks. These responses suggested that the core interaction model and information structure were not only usable but also aligned with analysts' real-world workflows, validating the design direction and establishing a solid foundation for further refinement.

Within this generally positive reception, a clear pattern emerged in how analysts evaluated and trusted model recommendations. Rather than relying solely on system-generated scores or abstract performance indicators, participants consistently emphasized the importance of community-validated signals, such as peer usage patterns, task appropriateness, and experiential feedback. Trust, in this context, appeared to be socially and contextually grounded, shaped by how other analysts had successfully used a model in similar situations. These themes surfaced repeatedly across different experience levels, indicating shared decision-making challenges rather than isolated preferences.

The following three quotes represent especially clear and representative examples of this feedback. Together, they capture the core concerns that guided the next phase of design iteration and directly informed how we refined explanation depth, community signals, and model comparison in the interface.

Senior Analyst P10

[…] if there is user feedback to crowdsource [….] wouldn't that ALWAYS trump the system suggestions? Users are going to be more influenced by other users rather than a nebulous “system suggestion…”

—Calibrating Trust

Intermediate Analyst P05

The ability to immediately jump to keywords and have a provided STT transcript is an incredibly valuable tool that would speed up my workflow considerably [….] to filter through audio much more quickly than I am able to now.

— Improving Tools

Senior Analyst P19

Model description & tags are helpful but don't explain how audio is processed [….] would be helpful to have examples of original vs processed clip & what the model is NOT good at [….] too many options can make the selection difficult.

— Explanation Needs

Hi-Fi Design: Layered Explanation System

The final high-fidelity design reflects a direct response to survey feedback and qualitative insights from language analysts. Rather than adding more metrics or visual complexity, we refined the interface around three core directions. First, transparency is achieved through a three-level explanation structure that supports learning without overwhelming users, allowing analysts to understand system reasoning at varying depths. Second, adaptability ensures that explanations, previews, and comparisons align with real-world analyst workflows—such as quickly previewing transcripts, comparing model outputs, and validating decisions in context. Finally, trust is reinforced through collaboration, by integrating community usage insights and peer feedback alongside system recommendations. Together, these refinements shift the interface from a static recommendation tool to a workflow-aware, trust-calibrated system that supports confident STT model selection in practice.

Scenario Video

To demonstrate the system in context, we developed a scenario centered on Riley, a fictional language analyst working at the Nixon Historical Audio Task Force. Riley is piloting a new suite of specialized STT models to identify conversations related to the Apollo space missions. Through a series of scenario videos, we show how layered explanations support model selection under real workflow pressure, without disrupting analytic momentum.

XUI Demo Video - Level 1 (Glanceable explanations)

Prioritizes plain-language, task-specific recommendations.

XUI Demo Video - Level 2 (Intermediate)

Synthesizes technical data with community input.

XUI Demo Video - Level 3 (Technical)

Tailored for technically oriented users who need granular metrics (e.g., Word Error Rate and training data).
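Word Error Rate, the headline metric at this level, is conventionally defined as WER = (S + D + I) / N, where S, D, and I are the word substitutions, deletions, and insertions needed to turn the reference transcript into the model's output, and N is the number of reference words. The sketch below computes it with a word-level edit distance. It is a minimal illustration of the standard definition, not the project's scoring code, and it skips the text normalization (casing, punctuation) that real evaluation toolkits usually apply.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Standard WER: word-level edit distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum edits to turn the first i reference words into the first j output words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        d[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,  # deletion
                d[i][j - 1] + 1,  # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
    return d[len(ref)][len(hyp)] / len(ref)


# One substitution ("landed" -> "land") plus one insertion ("it") over 4 reference words.
print(word_error_rate("the eagle has landed", "the eagle has land it"))  # 0.5
```

Because insertions count as errors, WER can exceed 1.0, which is one reason a raw score benefits from the plain-language framing of the earlier levels.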

Adaptability to Real-World Analyst Workflows

The system is designed to adapt to how analysts actually work, not how models are abstractly evaluated. Integrated preview interactions—such as transcript sampling, audio playback, and side-by-side comparisons—allow analysts to validate recommendations within their existing audio analysis flow. By embedding model selection directly into the audio player and task context, the interface minimizes disruption and supports rapid, situational decision-making.

Key UI & Interaction Highlights

The interface is designed to support confident, efficient STT model selection by aligning explainability, interaction, and trust with analysts’ real-world workflows. Rather than presenting a single fixed interaction model, the system emphasizes progressive disclosure, contextual adaptation, and community-informed signals. Together, these elements ensure that the interface remains understandable for new users, efficient for experienced analysts, and trustworthy across different levels of task complexity.

Trust through Community-Informed Collaboration

Trust is further reinforced by incorporating community usage insights alongside system recommendations. Signals such as peer usage frequency, task-specific success patterns, and qualitative feedback provide analysts with socially grounded context for decision-making. Rather than relying solely on algorithmic scores, the interface surfaces collective experience, helping analysts calibrate trust through both system intelligence and community knowledge.

Transparency through Layered Explainability

To support learning without overwhelming users, the interface employs a three-level explanation structure that unfolds on demand. At a glance, analysts see plain-language recommendations and key suitability signals. As needed, they can expand explanations to understand why a model is suggested and, at the deepest level, how it performs based on technical metrics and training context. This layered approach allows transparency to scale with user intent, supporting both quick decisions and deeper verification.

Design Insights & Takeaways

This project offers a framework for designing trustworthy AI recommendation systems in complex professional domains. First, explanations must be layered to support both generalists and experts without privileging one over the other. Second, trust is calibrated through social intelligence—analysts consistently rely on peer-validated experience over abstract system metrics. Finally, explainable interfaces must be responsive, dynamically matching explanation depth to the analyst’s immediate workflow needs. Together, these principles help accelerate audio analysis and lay the groundwork for meaningful, long-term analyst–AI collaboration.